PROJECTS

Holding the Hyperscalers Accountable

Our investigations expose corner-cutting, push for accountability, and mobilize the public to ensure AI serves the common good.

Model Republic

January 2026

A new publication from The Midas Project.

Deeply researched analysis of the AI industry, policy moves, and the forces shaping the rules of artificial intelligence — delivered to your email.

Open Letter to OpenAI

August 2025

More than 100 prominent AI experts, former OpenAI team members, public figures, and civil society groups signed an open letter calling for greater transparency from OpenAI.

The OpenAI Files

June 2025

The OpenAI Files is the most comprehensive collection to date of documented concerns with governance practices, leadership integrity, and organizational culture at OpenAI.

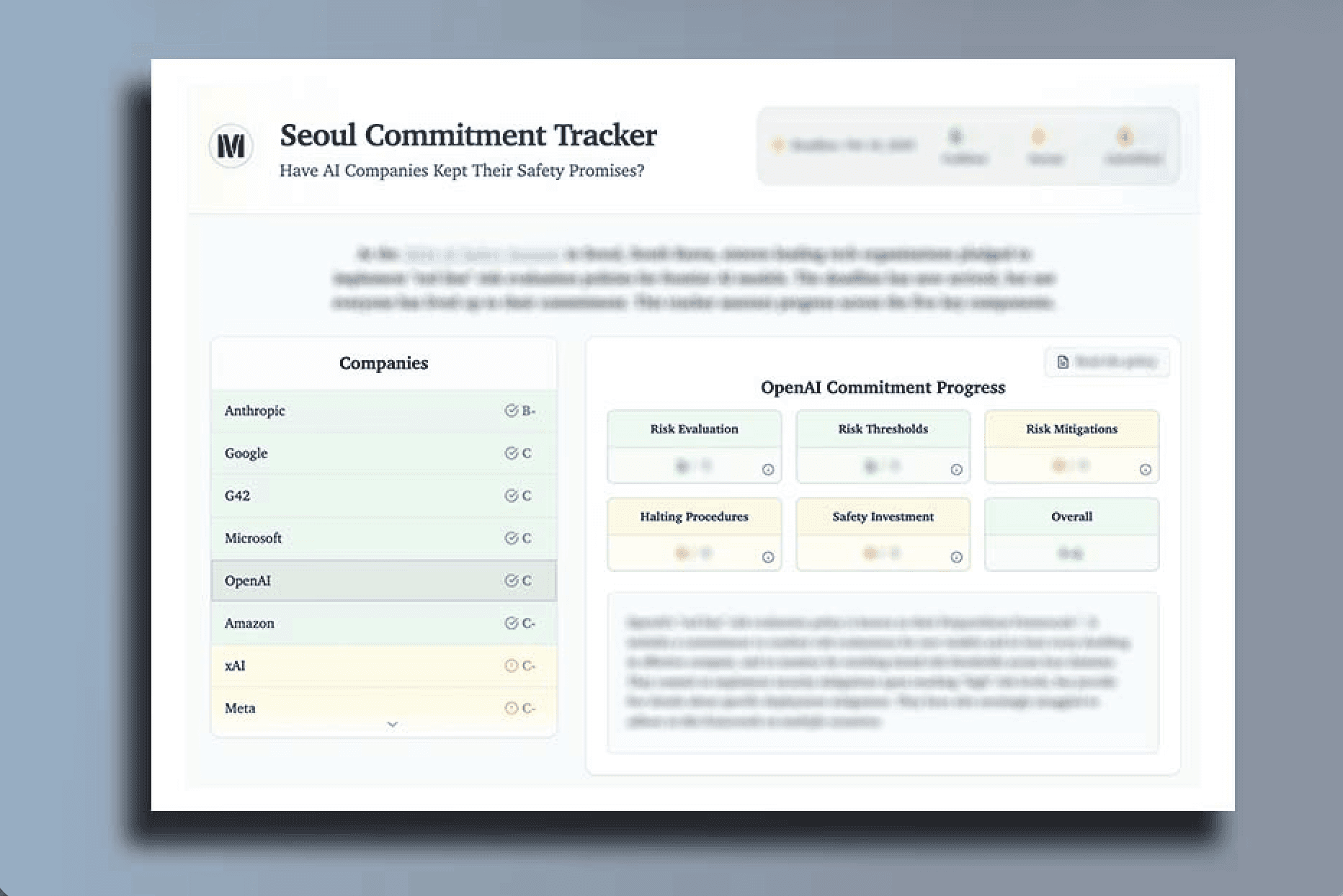

Seoul Tracker

February 2025

At the 2024 AI Safety Summit in Seoul, South Korea, sixteen leading tech organizations pledged to implement "red line" risk evaluation policies for frontier AI models. The deadline has now arrived, but not everyone has lived up to their commitment. This tracker assesses progress across the five key components.

Join our Movement

Encourage tech companies to prioritize safety, transparency, and public interest in AI development.